AI Content Isn’t Illegal in India, But Hiding It Might Be

The Indian government is about to draw a line in the digital sand — and every creator using AI tools needs to pay attention.

India's Ministry of Electronics and Information Technology is preparing mandatory labeling requirements for AI-generated content. If you've used AI to create an image, edit a video, generate a caption, or modify someone's voice, you'll need to disclose it. Clearly. Visibly. Every single time.

This isn't a vague future concern. By some estimates, India has over 100 million creators, and the country is a critical market for global platforms, so this regulation will shape how the entire creator economy operates. What starts as an India-specific rule will likely influence platform policies worldwide, affecting creators everywhere.

The motivation is straightforward: deepfakes of politicians, celebrities, and business leaders have exploded. Synthetic content threatens elections, spreads misinformation, and erodes public trust. The government wants control before AI-generated content becomes indistinguishable from reality.

For creators, this means your workflow is about to change. The question isn't whether to adapt — it's how to do it without killing your growth, losing brand deals, or sacrificing the efficiency that AI tools provide.

What the New Guidelines Actually Say

Based on government briefs and policy discussions, here's what creators should expect.

Mandatory disclosure: Every piece of AI-generated or AI-modified content published online must be clearly labeled. The label needs to be visible and easy to understand — no fine print buried in long captions.

Platform responsibility: Instagram, YouTube, X, ShareChat, and every other social platform will need to deploy detection technology for AI-generated media. When someone uploads content, the platform's AI will scan it. If it detects synthetic elements without proper disclosure, the platform must flag it.

Special rules for impersonation: Content that uses AI to manipulate someone's face or voice gets automatic scrutiny. Deepfakes of public figures, especially political deepfakes, will be treated as high-risk content requiring immediate review.

Rapid takedown requirements: Platforms must remove harmful synthetic media within hours, particularly during election cycles. If your AI-generated content gets flagged as misleading or dangerous, it won't sit in a review queue for days — it comes down fast.

Creator accountability: You're responsible for accurate disclosure. Platforms will provide the tools, but you decide what to label and how. Get it wrong consistently, and penalties escalate — especially for political or health-related misinformation.

The expected timeline? Phased implementation throughout 2025 and 2026. Strict enforcement during election periods. If you're a creator in India or creating content for Indian audiences, you have months — not years — to prepare.

What Actually Counts as AI-Generated Content?

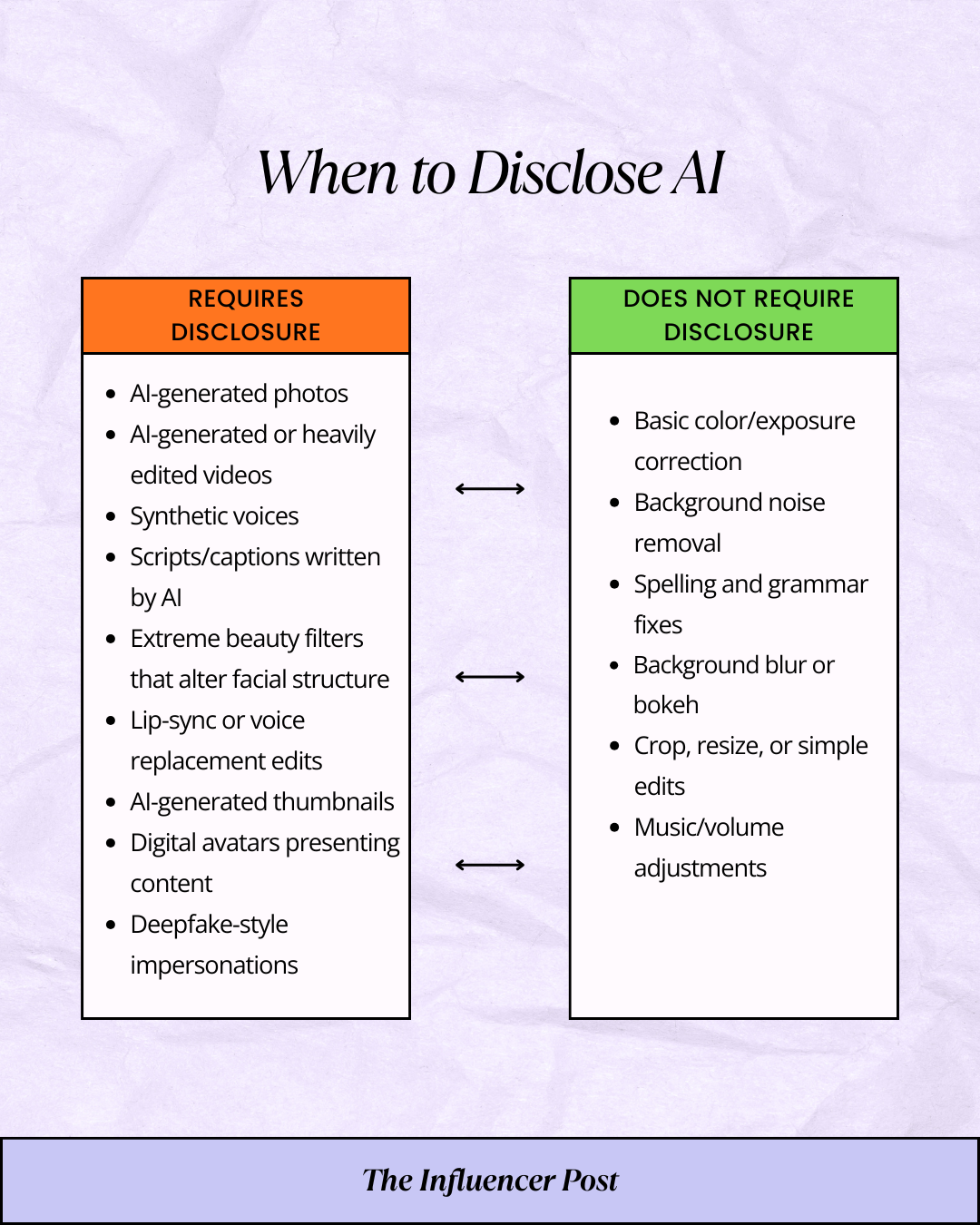

Before you can label something, you need to know what qualifies. The government's definition is still evolving, but the general principle is clear: if AI helped create, alter, or amplify your content in a way that changes meaning, representation, or identity, it probably needs a label.

Here's what that looks like in practice.

Content that almost certainly requires disclosure:

- Images created with Midjourney, DALL·E, or Ideogram

- Videos generated or heavily edited with Runway, Pika Labs, or similar tools

- Synthetic voices created with ElevenLabs or Resemble AI

- Scripts or captions written entirely by ChatGPT, Gemini, or Claude and posted with little or no editing

- Beauty filters that reshape facial features beyond recognition

- AI lip-sync edits that put different words in someone's mouth

- AI-generated thumbnails

- Digital avatars presenting your content

- Deepfake-style content impersonating politicians, celebrities, or public figures

Content that probably doesn't require disclosure:

- Basic color correction and exposure adjustments

- Background noise removal

- Standard spelling and grammar checks

- Background blur or bokeh effects

- Simple crop and resize operations

- Music volume adjustments

The line gets blurry in the middle. What about Instagram's AI-powered auto-enhance feature? What about using AI to suggest hashtags? What about Canva's AI layout recommendations?

Until the final guidelines are published, creators face uncertainty. The safest approach is simple: when in doubt, disclose. Over-labeling carries minimal risk. Under-labeling could mean compliance violations, content takedowns, or worse — losing audience trust when they discover you weren't transparent.
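The "when in doubt, disclose" rule is easy to turn into a quick pre-upload check. The sketch below is a hypothetical helper, not a platform or government tool: the edit categories are invented shorthand for the two lists above, the disclosure wording is an assumption, and any edit not on the "probably doesn't require disclosure" list defaults to needing a label.

```python
# Hypothetical pre-upload check. Categories mirror this article's two lists,
# not any official MeitY definition, and may change once final rules are out.
REQUIRES_LABEL = {
    "ai_image", "ai_video", "synthetic_voice", "ai_written_caption",
    "face_reshaping_filter", "lip_sync_edit", "ai_thumbnail",
    "digital_avatar", "impersonation",
}
PROBABLY_FINE = {
    "color_correction", "noise_removal", "spell_check",
    "background_blur", "crop_resize", "volume_adjustment",
}

# Assumed wording; use whatever label your platform or brand requires.
DISCLOSURE_LINE = "Made with AI assistance."


def needs_label(edits: list[str]) -> bool:
    """True if any edit is on the disclose list or is unknown (when in doubt, disclose)."""
    return any(edit in REQUIRES_LABEL or edit not in PROBABLY_FINE for edit in edits)


def caption_with_disclosure(caption: str, edits: list[str]) -> str:
    """Append a plain-language disclosure line to the caption when a label is needed."""
    if needs_label(edits):
        return f"{caption}\n\n{DISCLOSURE_LINE}"
    return caption


if __name__ == "__main__":
    print(needs_label(["color_correction", "crop_resize"]))  # False
    print(needs_label(["color_correction", "auto_enhance"]))  # True: unknown edit
```

Treating unknown edits (like Instagram's auto-enhance) as "label it" is the design choice here: it mirrors the article's advice that over-labeling is the lower-risk mistake.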

Every Platform Will Be Affected

This isn't just about Instagram or YouTube. The regulation is platform-agnostic, which means it applies everywhere.

Social platforms: Instagram, Facebook, YouTube, X, Snapchat, LinkedIn, ShareChat, Moj, Josh — all of them will need compliance mechanisms.

Messaging apps: WhatsApp and Telegram might face requirements for forwarded AI-generated media, especially viral content.

Content creation tools: Platforms like Runway, Midjourney, and Pika Labs that generate AI content might need to embed disclosure metadata automatically.

Streaming services: OTT platforms using synthetic actors or AI-generated characters will need to disclose it.

You won't get a personal notice from the Ministry of Electronics and Information Technology. You'll get a notification from Instagram saying your content was flagged, your reach was limited, or your monetization was paused. The platform becomes the enforcer, and you're the one dealing with the consequences.

What This Changes for Creators (And It's a Lot)

Let's talk about the practical impact on your day-to-day work.

Your workflow gets more complex. You'll need to add disclosure lines in captions, watermarks on images, on-screen labels in videos, and metadata tags in files. What used to be "edit, export, upload" becomes "edit, export, label, verify, upload."

Platforms are likely to demote unlabeled content. To avoid regulatory trouble, Instagram and YouTube will probably build aggressive auto-detection. If their systems decide your content is synthetic and you didn't label it, expect it to be pushed down in the algorithm: less reach, fewer views, lower engagement.

Brand partnerships will demand proof. Expect partnership contracts to include clauses like: "Provide raw files for all content. Disclose any AI tools used. Confirm no AI-generated models without prior approval." Brands don't want to discover after publication that their campaign used undisclosed AI content.

News and political creators face maximum scrutiny. If you cover policy, elections, finance, or health, expect your content to be flagged more aggressively. AI-manipulated political content is the government's primary concern. One mistake in this space could end your ability to create content in that niche.

Trust becomes a differentiator. In a market where everyone is required to disclose AI use, the creators who do it most transparently and authentically will stand out. Your audience will notice who seems honest versus who seems like they're disclosing only because they have to.

Micro-creators may lose part of their edge. One reason AI tools leveled the playing field was speed: a solo creator could produce content that looked professionally designed, edited, and polished. Labeling doesn't slow that down, but if audiences come to see labeled AI-assisted content as less valuable, the advantage shrinks.

How to Keep Making Money While Following the Rules

Here's the good news: AI disclosure doesn't kill monetization. It just changes the strategy.

What AI can still handle without harming your brand:

- Writing first drafts, outlines, and scripts (with human editing)

- Keyword research and topic analysis

- Color correction and audio enhancement

- Thumbnail design and graphics

- B-roll footage and background visuals

- Non-impersonation voiceovers

- Subtitles and captions

- Intro and outro animations

- Workflow automation

These tasks speed up production without replacing your creative voice. Disclose them wherever they change what viewers see or hear, and most audiences won't care. Brands understand efficiency.

What humans must handle to stay profitable:

- On-camera presence and personality

- Key narration and message delivery

- Personal opinions, analysis, and insights

- Sponsored content talking points

- Emotional storytelling and vulnerability

- Authenticity checks before posting

- Original footage and genuine reactions

Sponsors don't pay for your editing skills. They pay for your credibility, your voice, your face, your connection with an audience. Keep those elements human, and monetization survives.

Where the real money will flow:

- Transparent creators who openly show "human + AI hybrid workflows"

- Creators who maintain human essence while using AI for efficiency

- Verified creators whose content passes platform detection checks without issues

- Niches like finance, law, health, policy — where human expertise is non-negotiable

The creators who win won't be the ones avoiding AI entirely. They'll be the ones using it strategically and communicating that strategy honestly.

The Questions Everyone's Asking

Do Instagram filters count as AI content?

If they significantly change facial structure, skin tone, or body shape beyond what makeup could achieve, they likely qualify. A simple color adjustment? Probably fine. A filter that makes you look like a different person? That needs disclosure.

Do AI voiceovers need labels?

Yes, if they replace your actual voice. If you're using ElevenLabs to create narration because you'd rather not record your own voice, disclose it. If you're just using AI to remove background noise from your real voice, you're probably okay.

Does ChatGPT-written text require disclosure?

It depends on how much you rely on it. If ChatGPT writes a full caption and you post it verbatim, it's safer to label it. If you use it to brainstorm ideas and then write the caption yourself, that's likely fine. The question is: did AI create the final content, or did it assist your process?

What about AI-generated thumbnails?

Yes, they need disclosure. Thumbnails are content. If an AI tool created the image, say so.

Can you still make money from AI-generated content?

Absolutely. Disclose it, keep your human elements strong, and brands will still work with you. What kills monetization is undisclosed AI use that damages trust.

Will platforms automatically flag my content?

Very likely. Instagram and YouTube already label some AI-generated content and ask creators to self-disclose realistic synthetic media. Expect automated flags for synthetic media to become more common as these rules take effect.

What if I only partially used AI?

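If the AI-assisted part meaningfully changes what viewers see or hear, disclose it, even if the rest of the content is yours. Routine fixes like noise removal or spell-checking probably don't count. When unsure, it's better to over-communicate than under-communicate.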

Is this law final?

Not yet, but enforcement is expected throughout 2025 and 2026. Treat it as imminent, not theoretical.

Why This Matters

India's AI labeling requirement is part of a larger global shift. The European Union's AI Act includes similar transparency obligations for deepfakes. China implemented disclosure requirements in 2023. California is legislating AI content transparency. This isn't an isolated policy; it's the beginning of a new standard.

For creators, the message is clear: the era of invisible AI assistance is ending. Transparency is becoming mandatory. The creators who adapt early, build disclosure into their workflow, and communicate honestly with audiences will have a massive advantage over those who wait.

AI won't disappear from content creation. It's too useful, too efficient, too powerful. But how we use it and how we talk about using it is changing. The creators who get ahead of this shift will define what responsible AI-assisted content creation looks like.

TIP Insight

AI will make you faster, but transparency will make you trusted. As regulations tighten globally, the creator economy will reward those who combine human storytelling with AI efficiency — without hiding it. Start building disclosure habits now, before platforms enforce them. Audit your tools, create clear labeling systems, and test audience response to transparency. The creators who adapt early will avoid penalties, win brand trust, and differentiate themselves in an ecosystem where authenticity becomes the real currency. Don't wait for enforcement to force your hand. The competitive advantage goes to those who prepare today, not those who scramble tomorrow.